Introduction: Why Look Inside AI Models?

Imagine trying to fix a complex machine without being able to open it up and look inside. That's the challenge we face with modern AI systems: they're incredibly powerful, but they often operate as "black boxes." Structural analysis is our toolkit for opening these boxes and understanding what's happening inside.

This blog post is heavily inspired by the lectures from Stanford University's Natural Language Understanding course (Stanford XCS224U: NLU).

While traditional evaluation methods focus on what AI models output (behavioral analysis), structural analysis examines how they actually work internally. It's like the difference between judging a car by its speed versus understanding its engine mechanics.

Why This Matters

Beyond the Black Box: Traditional evaluation only shows us inputs and outputs, missing the crucial "how" and "why" of AI decisions.

Finding Root Causes: When AI systems make mistakes or show bias, structural analysis helps identify the underlying reasons rather than just the symptoms.

Targeted Improvements: Understanding internal mechanisms enables precise model improvements rather than trial-and-error fixes.

Safety & Trust: As AI systems become more powerful, understanding their inner workings becomes crucial for ensuring reliability and alignment with human values.

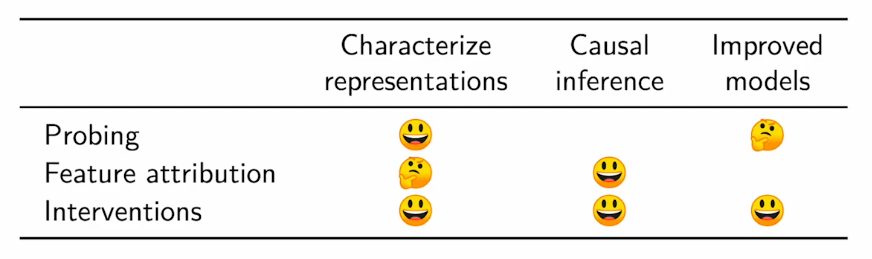

The Three Pillars of Structural Analysis

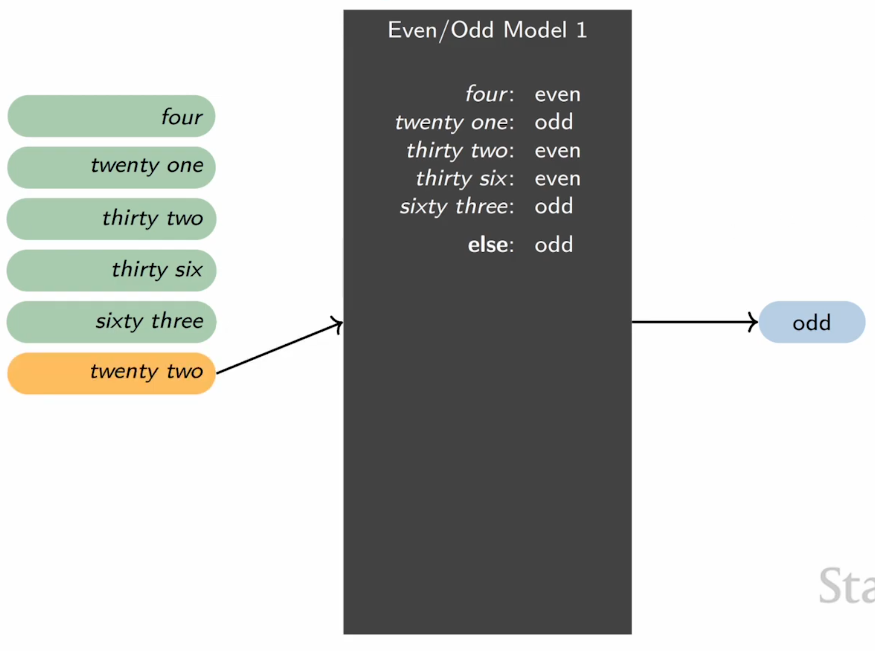

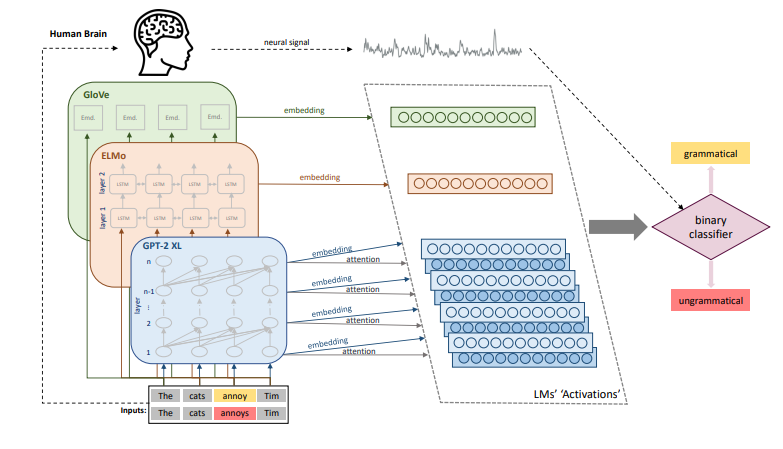

1. Probing: The AI X-Ray

Think of probing as taking X-rays of different parts of a neural network. Just as medical X-rays reveal bone structure, probes reveal what information is encoded in different parts of the model.

How It Works

Linear Probes: Simple classifiers that test whether specific information (like syntax or semantics) can be linearly decoded from a layer's activations.

- Like basic X-rays showing bone structure

- Quick and interpretable results

- May miss complex patterns

Non-linear Probes: More sophisticated classifiers that can detect complex patterns.

- Like advanced imaging (CT/MRI) showing detailed tissue structure

- Can find subtle patterns

- More computationally intensive
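The linear probe described above can be sketched in a few lines. This is a minimal illustration, not a recipe from the course: the "activations" are synthetic vectors generated by a hypothetical `make_activations` helper, with a binary property deliberately encoded along one dimension, and the probe is a plain logistic regression written with the standard library only. In practice the vectors would be hidden states extracted from a frozen model.

```python
import math
import random

def make_activations(n, dim, seed=0):
    """Synthetic stand-in for layer activations (an assumption for this sketch).
    The binary label is linearly encoded along dimension 0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        vec = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        vec[0] += 3.0 if label == 1 else -3.0
        data.append((vec, label))
    return data

def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def train_linear_probe(data, dim, lr=0.1, epochs=50):
    """Logistic regression trained with SGD. Only the probe's weights are
    learned; the model that produced the activations is never updated."""
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        for vec, label in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, vec)) + b)
            err = p - label
            w = [wi - lr * err * xi for wi, xi in zip(w, vec)]
            b -= lr * err
    return w, b

def probe_accuracy(w, b, data):
    """Fraction of held-out activations whose label the probe recovers."""
    correct = 0
    for vec, label in data:
        z = sum(wi * xi for wi, xi in zip(w, vec)) + b
        correct += int((z > 0) == (label == 1))
    return correct / len(data)

train = make_activations(200, dim=8, seed=0)
held_out = make_activations(100, dim=8, seed=1)
w, b = train_linear_probe(train, dim=8)
accuracy = probe_accuracy(w, b, held_out)
print(f"probe accuracy on held-out activations: {accuracy:.2f}")
```

High held-out accuracy suggests the property is linearly decodable from that layer. It does not by itself show that the model actually uses the information, which is one reason probing is usually paired with interventions.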

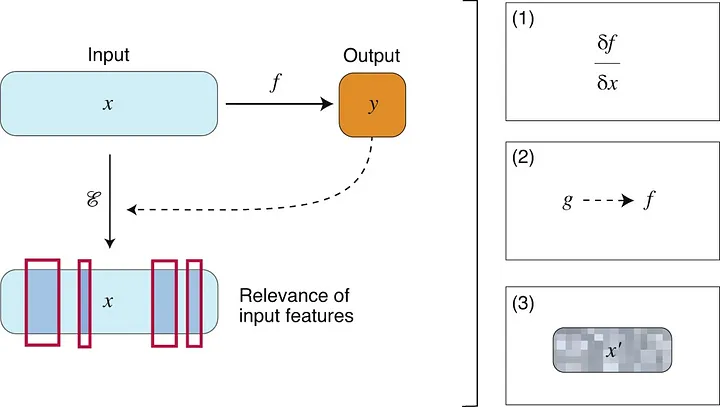

2. Feature Attribution: Following the Decision Trail

Feature attribution is like tracing a detective's investigation: it reveals which parts of the input were most important for the final decision.

Key Methods

Saliency Maps: Highlight which input elements (words, pixels) most influenced the output.

- Like highlighting key evidence in a case

- Visual and intuitive

- Can sometimes be noisy
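A gradient-based saliency map can be illustrated on a toy model. The sketch below is an assumption-laden example: `model_score` is a hypothetical one-layer scorer, the per-token feature values and weights are made up, and the gradient is approximated with central finite differences rather than the automatic differentiation a real framework would provide.

```python
import math

def model_score(x, weights):
    """Toy differentiable model: tanh of a weighted sum of input features."""
    return math.tanh(sum(w * xi for w, xi in zip(weights, x)))

def saliency(x, weights, eps=1e-5):
    """Approximate |d score / d x_i| for each input feature
    using central finite differences."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        grad = (model_score(up, weights) - model_score(down, weights)) / (2 * eps)
        scores.append(abs(grad))
    return scores

tokens = ["the", "movie", "was", "wonderful"]
x = [0.1, 0.2, 0.1, 0.3]        # hypothetical per-token feature values
weights = [0.0, 0.5, 0.1, 2.0]  # hypothetical toy model parameters

sal = saliency(x, weights)
ranked = sorted(zip(tokens, sal), key=lambda pair: pair[1], reverse=True)
for token, score in ranked:
    print(f"{token:>10}: {score:.3f}")
```

The ranking shows which inputs the score is most sensitive to at this particular point. Because the gradient is local, the map can change noticeably for nearby inputs, which is one source of the noise mentioned above.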

Attention Analysis: Shows how the model weighs different parts of the input.

- Reveals what the model "focuses" on

- Particularly useful for transformer models

- Helps understand information flow
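For attention analysis, the quantity usually inspected is the matrix of attention weights, computed as a softmax over scaled query-key dot products. The following sketch computes that matrix for three tokens; the query and key vectors are hypothetical values chosen for illustration, not taken from any trained model.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d)) per query."""
    d = len(keys[0])
    matrix = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        matrix.append(softmax(scores))
    return matrix

tokens = ["cat", "sat", "mat"]
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical query vectors
keys = [[2.0, 0.0], [0.0, 2.0], [1.5, 1.5]]     # hypothetical key vectors

weights = attention_weights(queries, keys)
for token, row in zip(tokens, weights):
    top = max(range(len(row)), key=lambda j: row[j])
    print(f"{token!r} attends most to {tokens[top]!r}: {[round(v, 2) for v in row]}")
```

Each row sums to 1 and shows how one token distributes its attention over the others. The weights describe where attention mass goes; they are not by themselves proof that a token was important for the final output.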

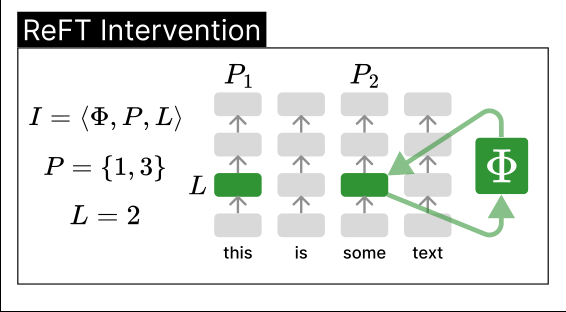

3. Interventions: AI Surgery

Like a neurosurgeon studying brain function by carefully modifying specific regions, interventions involve precisely changing parts of the network to understand their role.

Common Techniques

Ablation Studies: Temporarily disabling specific components to see their impact.

- Like studying brain function by temporarily deactivating regions

- Clear cause-effect relationships

- Can reveal redundancy and critical components
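An ablation study can be sketched on a tiny network: run the model normally, then rerun it with one hidden unit forced to zero and compare the outputs. Everything here is an assumption for illustration: the `forward` function, its `ablate` argument, and the weights are hypothetical, and a real study would average the effect over a dataset rather than a single input.

```python
def relu(v):
    return max(0.0, v)

def forward(x, w_in, w_out, ablate=None):
    """Tiny one-hidden-layer network. If `ablate` is an index,
    that hidden unit is zeroed before the output is computed."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_in]
    if ablate is not None:
        hidden[ablate] = 0.0
    return sum(w * h for w, h in zip(w_out, hidden))

# Hypothetical weights: three hidden units reading a two-dimensional input.
w_in = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_out = [2.0, 0.1, 2.0]
x = [1.0, 0.5]

baseline = forward(x, w_in, w_out)
effects = [abs(baseline - forward(x, w_in, w_out, ablate=i)) for i in range(len(w_in))]

for i, effect in enumerate(effects):
    print(f"ablating hidden unit {i} changes the output by {effect:.2f}")
```

Units whose removal barely changes the output are candidates for redundancy, while large changes point to components the prediction depends on for this input.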

Activation Editing: Modifying specific activations to change model behavior.

- Precise control over model internals

- Can test hypotheses about learned representations

- Useful for understanding and fixing biases
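Activation editing can be sketched the same way: intercept the hidden activations, shift them along a chosen direction, and observe how the output moves. The `forward` function, the `add_direction` helper, the direction, and all weights below are hypothetical; with a real model the edit would typically be applied through a framework hook on a specific layer.

```python
def forward(x, w_in, w_out, edit=None):
    """Tiny linear network. `edit` is an optional function applied
    to the hidden activations before the output is computed."""
    hidden = [sum(w * xi for w, xi in zip(row, x)) for row in w_in]
    if edit is not None:
        hidden = edit(hidden)
    return sum(w * h for w, h in zip(w_out, hidden))

def add_direction(direction, alpha):
    """Build an edit that shifts activations by alpha * direction."""
    def edit(hidden):
        return [h + alpha * d for h, d in zip(hidden, direction)]
    return edit

# Hypothetical weights and input.
w_in = [[1.0, -1.0], [0.5, 0.5]]
w_out = [1.0, -2.0]
x = [1.0, 2.0]

# Hypothesis under test: hidden unit 0 carries a feature that pushes the output up.
direction = [1.0, 0.0]

original = forward(x, w_in, w_out)
edited = forward(x, w_in, w_out, edit=add_direction(direction, alpha=6.0))
print(f"original output: {original:.1f}, edited output: {edited:.1f}")
```

If the output moves as the hypothesis predicts when the activation is shifted, that is evidence the direction plays the proposed role. In a deep non-linear model the response to an edit is generally not this clean.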

The Challenges: Why This Is Hard

Efforts to understand AI internals face several fundamental challenges:

1. Scale and Complexity

- Modern models have billions of parameters

- Attention cost scales quadratically with sequence length

- Analyzing all interactions is computationally intractable
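To make the quadratic growth concrete: for a sequence of n tokens, standard self-attention computes one score per (query, key) pair, so each head produces n × n scores. The helper below, `attention_score_count`, is a hypothetical name used only for this back-of-the-envelope calculation.

```python
def attention_score_count(seq_len, num_heads=1, num_layers=1):
    """Number of query-key scores computed by standard self-attention."""
    return seq_len * seq_len * num_heads * num_layers

for n in (512, 1024, 2048):
    print(f"sequence length {n}: {attention_score_count(n):,} scores per head per layer")
```

Doubling the sequence length quadruples the number of attention scores, and any analysis that inspects every score inherits that growth.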

2. Distributed Knowledge

- Information is spread across many neurons

- No single "grandmother cell" for concepts

- Features combine in complex ways

3. Non-linear Behavior

- Multiple layers create complex transformations

- Small changes can have large effects

- Traditional linear analysis tools often fail

4. Causal Confusion

- Hard to isolate individual effects

- Networks can compensate for changes

- Multiple paths to the same output

Looking Forward: The Future of AI Interpretability

As AI systems become more powerful and ubiquitous, understanding their inner workings becomes increasingly crucial. The field of structural analysis is evolving rapidly, with new tools and techniques emerging regularly.

Key Developments to Watch

- Automated Analysis Tools: Making interpretability more accessible

- Real-time Monitoring: Understanding AI decisions as they happen

- Standardized Methods: Creating common frameworks for analysis

Citation

Transformer, Vi. (Feb 2025). "Peeking Inside the AI Brain". 16x16 Words of Wisdom. https://vitransformer.netlify.app/posts/peeking-inside-the-ai-brain/

Or

@article{vit2025llmstructure,

title = "Peeking Inside the AI Brain",

author = "Transformer, Vi",

journal = "16x16 Words of Wisdom",

year = "2025",

month = "Feb",

url = "https://vitransformer.netlify.app/posts/peeking-inside-the-ai-brain/"

}

References

- Elhage, N., Hume, T., Olsson, C., Schiefer, N., Henighan, T., Kravec, S., Hatfield-Dodds, Z., Lasenby, R., Drain, D., Chen, C., Grosse, R., McCandlish, S., Kaplan, J., Amodei, D., Wattenberg, M., & Olah, C. (2022). Toy Models of Superposition. arXiv preprint arXiv:2209.10652.

- Varma, G. (2021). Feature Attribution in Explainable AI. Geek Culture. https://medium.com/geekculture/feature-attribution-in-explainable-ai-626f0a1d95e2

- Stanford University. (n.d.). XCS224U: Natural Language Understanding. [Video playlist]. YouTube. https://www.youtube.com/watch?v=K_Dh0Sxujuc&list=PLoROMvodv4rOwvldxftJTmoR3kRcWkJBp