Why Explainable AI Matters More Than Ever

Imagine applying for a loan and being denied not by a human, but by an algorithm that offers no explanation. This scenario highlights the growing need for Explainable AI (XAI) in our increasingly automated world. As AI systems make critical decisions in healthcare, finance, hiring, and criminal justice, their "black box" nature raises urgent questions: How do we trust systems we can’t understand?

XAI isn’t just a technical buzzword. It’s a bridge between human intuition and machine logic. By making AI decisions transparent and interpretable, XAI builds user trust and supports compliance with regulations (such as the GDPR’s rules on automated decision-making, often summarized as a "right to explanation"). It can even improve system performance by exposing hidden flaws. For instance, a biased hiring algorithm could perpetuate discrimination if its reasoning isn’t scrutinized.

Demystifying XAI: Key Concepts You Need to Know

To grasp XAI, start with its foundational pillars:

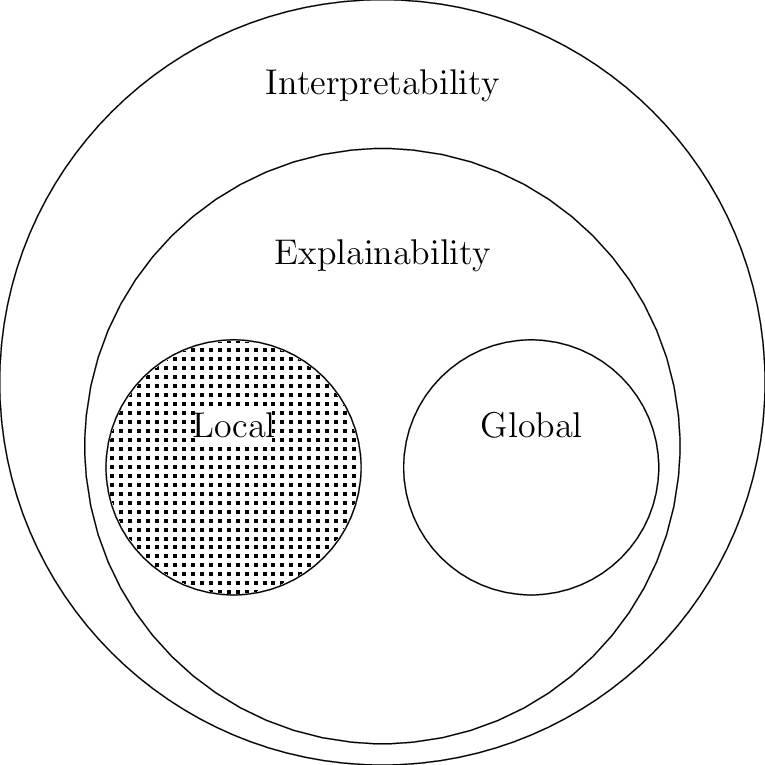

Interpretability vs. Explainability

- Interpretability means understanding how a model works (e.g., a decision tree showing step-by-step logic for a medical diagnosis).

- Explainability digs deeper into why a decision was made (e.g., highlighting which features led an AI to flag a tumor as malignant).

Transparency

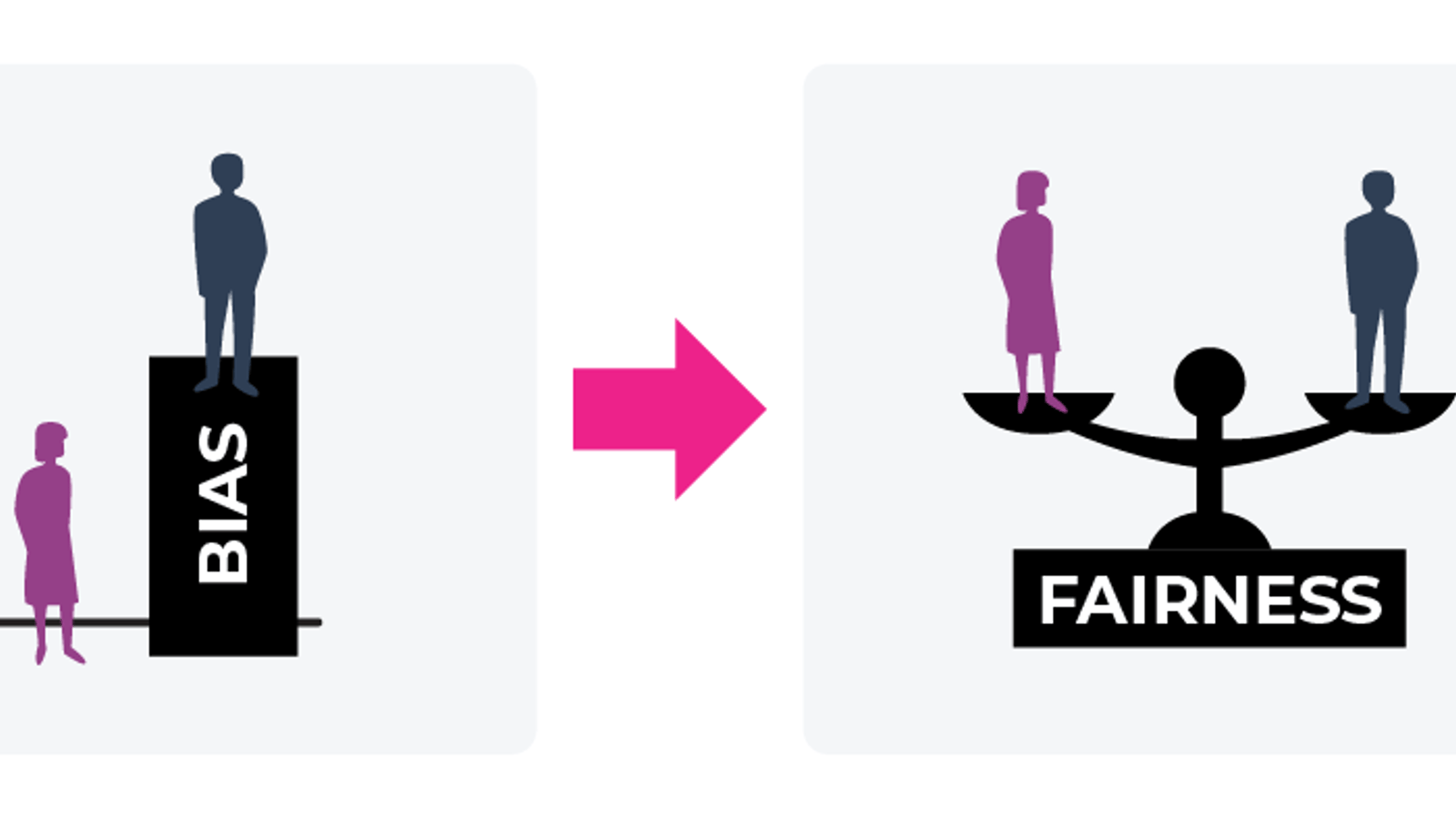

Transparent models, like linear regression, openly reveal their inner workings. Think of it as a glass box: you can inspect every weight and the bias (intercept) term behind each prediction.

Fairness

Fair AI avoids amplifying biases. For example, a predictive policing tool trained on historically biased crime data might unfairly target marginalized communities. XAI techniques can help surface that bias so it can be corrected.
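A common first check for this kind of bias is to compare how often a model flags each group. The sketch below is a minimal illustration under loud assumptions: the predictions and group labels are made up, and the ratio of the lowest to the highest selection rate is only one of many fairness metrics. US employment guidance uses 0.8 (the "four-fifths rule") as a rough threshold for this ratio.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (e.g., 'flagged') within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    return min(rates.values()) / max(rates.values())

# Hypothetical model outputs (1 = flagged) for two neighborhoods, A and B.
preds = [1, 0, 0, 0, 0, 1, 1, 1, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(preds, groups)
print(rates)
print(disparate_impact_ratio(rates))
```

A ratio far below 1 does not prove unfairness on its own. It is a signal that the model's reasoning for the over-flagged group deserves a closer, explanation-driven look.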

These concepts are intertwined. Transparency enables interpretability, which fuels explainability, while fairness ensures ethical outcomes. Together, they form the backbone of AI systems that are not just smart, but accountable.

Takeaway: XAI isn’t about dumbing down AI but about empowering humans to collaborate with machines responsibly.

The Balancing Act: XAI’s Core Challenges

AI’s greatest strength, its complexity, is also its biggest hurdle. Here’s what you need to know about the tightrope walk of Explainable AI:

The Black Box Problem

Models like neural networks and LLMs make powerful predictions, but their decision-making is opaque. This lack of clarity undermines:

- Trust: Would you accept a cancer diagnosis from an AI that can’t explain why?

- Accountability: How can we debug errors or fix biases if we don’t know where they originate?

- Compliance: Regulations like the GDPR require meaningful information about the logic behind certain automated decisions (e.g., loan denials).

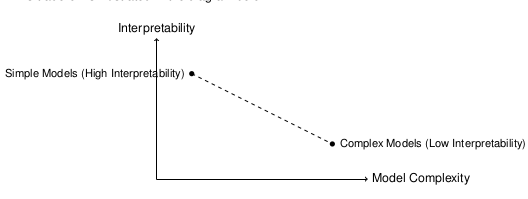

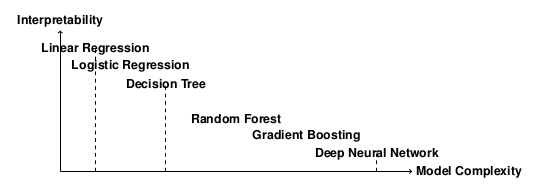

The Trade-Off: Simplicity vs. Power

- Simple models (linear regression, decision trees) are transparent but lack nuance.

- Complex models (LLMs, deep learning) excel at predictions but act as “black boxes.”

The sweet spot? One option is a hybrid approach that pairs complex models with post-hoc explanation tools (like SHAP or LIME), aiming to keep the accuracy while adding clarity.
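To make the LIME half of that pairing concrete, here is a from-scratch sketch of the core idea. It does not use the `lime` package's API. The steps are:

1. Perturb one input.
2. Weight each sample by its proximity to that input.
3. Fit a weighted linear model, whose coefficients act as the local explanation.

The `black_box` function, the Gaussian sampling scale, and the kernel width are all assumptions chosen for illustration.

```python
import math
import random

def black_box(x1, x2):
    # Hypothetical stand-in for an opaque model; any callable would do.
    return x1 ** 2 + 3 * x2

def solve(a, b):
    """Solve the small linear system a @ w = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [rv - f * cv for rv, cv in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def lime_explain(f, x, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around point x (two features)."""
    rng = random.Random(seed)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y.
    xtx = [[0.0] * 3 for _ in range(3)]
    xty = [0.0] * 3
    for _ in range(n_samples):
        z = [xi + rng.gauss(0, scale) for xi in x]          # perturbed sample
        weight = math.exp(-math.dist(z, x) ** 2 / kernel_width ** 2)
        row = [1.0, z[0], z[1]]                             # intercept + features
        y = f(*z)
        for i in range(3):
            xty[i] += weight * row[i] * y
            for j in range(3):
                xtx[i][j] += weight * row[i] * row[j]
    _, w1, w2 = solve(xtx, xty)
    return {"x1": w1, "x2": w2}

print(lime_explain(black_box, [1.0, 2.0]))
```

Near the point (1, 2), the surrogate's coefficients should land close to the black box's local slopes, about 2 for `x1` and 3 for `x2`, even though the model itself is non-linear.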

Key Roadblocks

- Scale: Billions of parameters in modern AI make tracing individual decisions extremely difficult.

- Ambiguity: A “good explanation” varies by audience (data scientists vs. doctors).

- Over-Simplification: Stripping complexity for interpretability can cripple performance.

Two Paths to Clarity

- Intrinsic Interpretability: Use transparent-by-design models (e.g., decision trees) where possible.

- Post-Hoc Explainability: Apply tools like SHAP to decode black-box decisions retroactively (e.g., “This loan was denied due to low income and high debt”).
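On the intrinsic path, a transparent-by-design model can report its own reasoning. This minimal sketch hand-codes a tiny decision tree and returns the rules it followed alongside the decision, so the explanation is simply the path taken. The features and thresholds are hypothetical.

```python
def loan_tree(applicant):
    """A tiny hand-written decision tree that returns its decision and the rules it used.

    The features and thresholds are hypothetical, chosen only for illustration.
    """
    path = []
    if applicant["income"] < 40_000:
        path.append("income < 40,000")
        if applicant["debt_ratio"] > 0.4:
            path.append("debt_ratio > 0.4")
            return "deny", path
        path.append("debt_ratio <= 0.4")
        return "approve", path
    path.append("income >= 40,000")
    if applicant["missed_payments"] > 2:
        path.append("missed_payments > 2")
        return "deny", path
    path.append("missed_payments <= 2")
    return "approve", path

decision, reasons = loan_tree({"income": 32_000, "debt_ratio": 0.55, "missed_payments": 0})
print(decision, "because", " and ".join(reasons))
# deny because income < 40,000 and debt_ratio > 0.4
```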

Peeking Inside the Black Box: Interpreting Deep Learning Models

Deep learning models like CNNs, RNNs, and Transformers drive cutting-edge AI applications, but their complexity makes them notoriously opaque. Here’s how researchers are untangling their inner workings and exploring why it matters.

Why Deep Learning Feels Like a Black Box

Deep learning’s power comes at a cost:

- Complexity: Models like CNNs and Transformers use millions (or billions) of parameters. Tracking how each contributes to a decision is like mapping every neuron in a human brain.

- Non-Linearity: Layers of activation functions (e.g., ReLU) create abstract patterns that are hard to reverse-engineer (e.g., “Why did this neuron activate for cat ears?”).

- No Built-In Rules: Unlike decision trees, deep models lack explicit logic. Decisions emerge from tangled, distributed patterns in the data.

- High Dimensionality: Models transform inputs (like images or text) into abstract features that defy human intuition (e.g., “This pixel cluster represents a tumor”).

Decoding CNNs: From Edges to Objects

CNNs excel at vision tasks but hide their logic in layers:

- Early Layers detect edges, textures, and simple shapes. For example, a CNN classifying cat images might respond to the edges that outline whiskers or ears.

- Deeper Layers recognize complex patterns (like eyes or fur), but these features become too abstract to interpret visually.

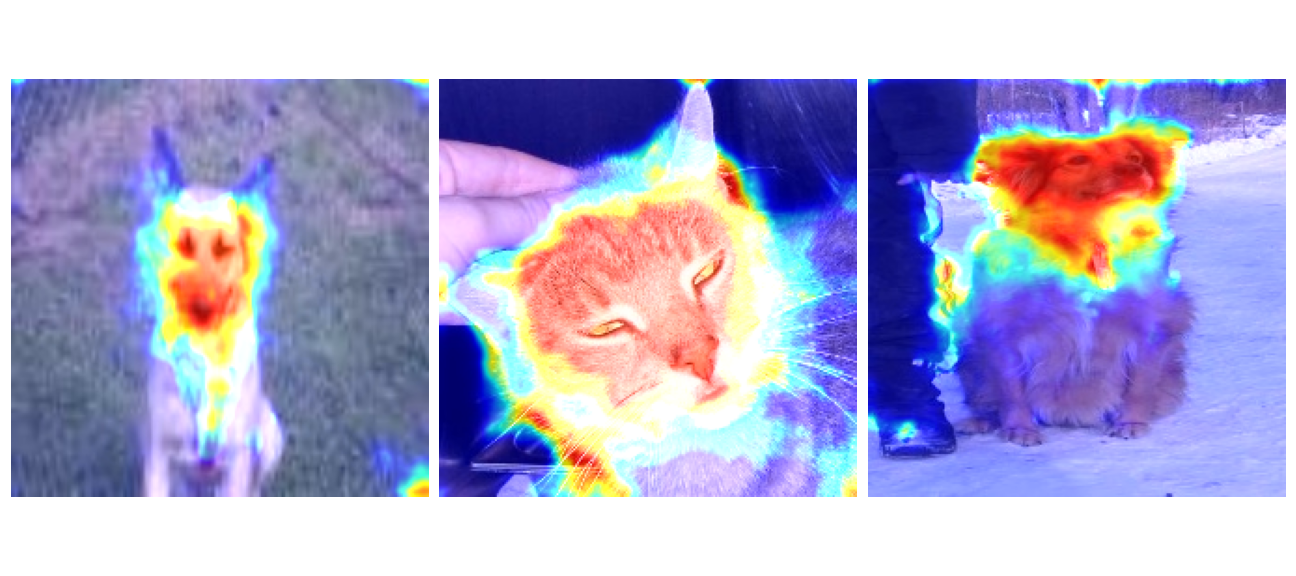

The Fix: Tools like Grad-CAM highlight regions in an image that drive predictions. For instance, a medical CNN might focus on irregular tumor borders to diagnose cancer.
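The heart of Grad-CAM takes three steps:

1. Average the gradients of the class score over each feature map to get a per-channel weight.
2. Take the weighted sum of the maps.
3. Keep only the positive part.

The sketch below assumes the last convolutional layer's activations and gradients have already been extracted, which in practice is done with a framework's autograd. The 2-channel, 3×3 values are made up.

```python
def grad_cam(activations, gradients):
    """Core Grad-CAM step for one image.

    activations[k][i][j]: feature map k of the last conv layer.
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j]).
    Returns a heatmap normalized to [0, 1] at feature-map resolution.
    """
    h, w = len(activations[0]), len(activations[0][0])
    # 1. Channel weights: global-average-pool each gradient map.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # 2. Weighted sum of feature maps, then ReLU to keep only positive evidence.
    cam = [[max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, activations)))
            for j in range(w)] for i in range(h)]
    # 3. Normalize for display (a real pipeline would also upsample to image size).
    peak = max(max(row) for row in cam) or 1.0
    return [[v / peak for v in row] for row in cam]

activations = [
    [[0, 0, 0], [0, 1, 2], [0, 2, 4]],   # channel 0
    [[3, 1, 0], [1, 0, 0], [0, 0, 0]],   # channel 1
]
gradients = [
    [[1.0] * 3 for _ in range(3)],       # class score rises with channel 0
    [[-0.5] * 3 for _ in range(3)],      # class score falls with channel 1
]

heatmap = grad_cam(activations, gradients)
for row in heatmap:
    print(row)
```

The hot region ends up in the bottom-right, where the positively weighted channel is most active. The top-left activity of channel 1 is suppressed because its gradients are negative.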

Unraveling RNNs: Time, Memory, and Attention

RNNs process sequences (text, sensor data) by updating hidden states over time. But what’s in those states?

- Hidden State Visualizations: Plotting activations shows how units respond to inputs. In a sine wave prediction model, some units activate rhythmically, mirroring the wave’s pattern.

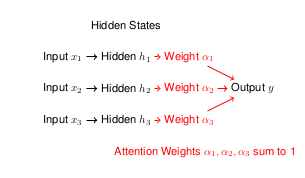

- Attention Mechanisms: Assign weights to key inputs. For example, in sentiment analysis, the model might focus on the word “not” to predict negativity.

Real-World Impact: In fraud detection, attention maps reveal which transaction patterns triggered an alert.
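Hidden-state visualizations like the sine-wave example start from simply recording each state as the sequence is processed. In this sketch a single tanh unit with hand-picked, untrained weights (a hypothetical stand-in for a trained RNN) is fed a sine wave. Its recorded activation rises and falls with the input.

```python
import math

def run_rnn(inputs, w_x=1.5, w_h=0.5, b=0.0):
    """Run a one-unit tanh RNN and record its hidden state at every step.

    The weights are hypothetical and hand-picked rather than trained.
    """
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)   # keep every hidden state so it can be plotted later
    return states

wave = [math.sin(2 * math.pi * t / 20) for t in range(40)]   # two periods
states = run_rnn(wave)
for t in range(0, 40, 5):
    print(f"t={t:2d}  input={wave[t]:+.2f}  hidden={states[t]:+.2f}")
```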

Transformers & Self-Attention: The Language of Focus

Transformers use self-attention to weigh relationships between words:

- Heatmaps expose which inputs matter most. For example, in translation, the model might link “bank” to “river” in one context and “money” in another.

- Multi-Head Attention: Different heads track diverse patterns (e.g., grammar vs. entities).

Why It Matters: In medical NLP, attention heatmaps can show how a model links symptoms to diagnoses in patient notes.
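A self-attention heatmap is the matrix of softmax-normalized, scaled query-key dot products. The sketch computes that matrix for three tokens. The 2-d query and key vectors are invented for illustration; in a real Transformer they are learned projections of token embeddings, and each attention head has its own.

```python
import math

def softmax(xs):
    m = max(xs)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d)) for each query."""
    d = len(keys[0])
    return [softmax([sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys])
            for q in queries]

# Hypothetical query/key vectors for three tokens (not from a trained model).
tokens = ["river", "bank", "money"]
queries = [[1.0, 0.0], [2.0, 0.2], [0.0, 1.0]]
keys = [[2.0, 0.0], [1.0, 1.0], [0.0, 2.0]]

weights = attention_weights(queries, keys)
for tok, row in zip(tokens, weights):
    print(f"{tok:>6}: " + "  ".join(f"{w:.2f}" for w in row))
```

Each printed row sums to 1 and forms one row of the heatmap. With these made-up vectors, "bank" attends most strongly to "river".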

The Interpretability Tightrope

While tools like Grad-CAM and attention maps help, deep learning’s opacity remains a hurdle:

- Power vs. Clarity: The smarter the model, the harder it is to explain.

- Audience Matters: Engineers need technical insights (e.g., feature importance), while end-users need plain-language reasoning (e.g., “Your loan was denied due to debt-to-income ratio”).

Takeaway: Interpretability isn’t about perfect explanations. It’s about building context-aware tools that help people fold AI-driven insights into their own decision-making.

Categories of XAI Techniques

XAI methods are broadly categorized based on two factors:

Model Interpretability:

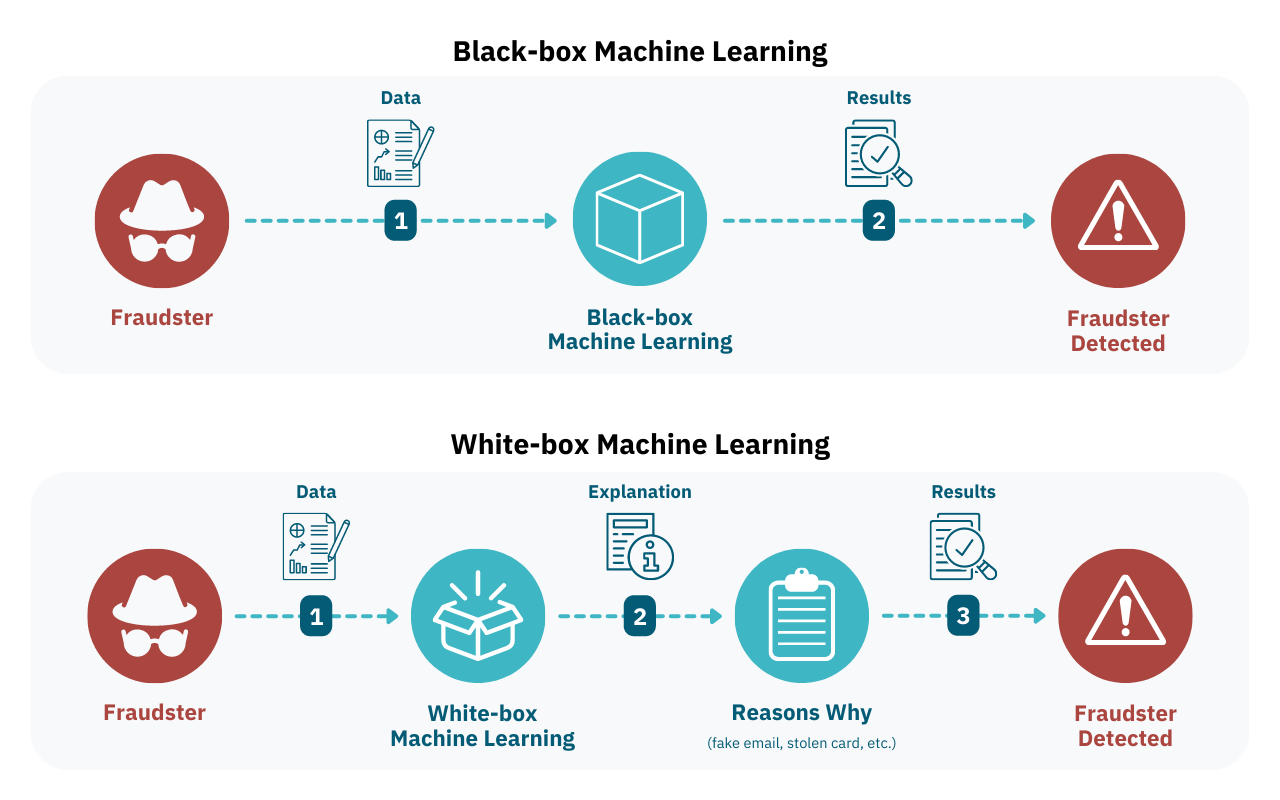

- White-box models: Inherently interpretable (e.g., Decision Trees).

- Black-box models: Require external tools for explanation (e.g., Deep Learning).

Approach:

- Model-based techniques

- Post-hoc interpretation

- Counterfactual explanations

- Causal inference

- Graph-based explanations

- Multimodal explainability

White-box vs. Black-box Models

White-box Models

These models are transparent by design, making their decision logic easy to interpret. Examples include:

- Linear/Logistic Regression: Uses coefficients to show each feature’s direction and strength of influence (comparable across features when inputs share a scale).

- Decision Trees: Follows a flowchart-like structure for decisions.

- Rule-based Systems: Relies on predefined "if-then" rules.

- K-Nearest Neighbors (KNN): Classifies based on similarity to labeled examples.

- Generalized Additive Models (GAMs): Models the prediction as a sum of per-feature (possibly non-linear) functions, so each feature’s effect can be plotted on its own.

Why use them? Ideal for high-stakes domains like healthcare or finance where transparency is critical.
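In a logistic regression, the log-odds are a sum of coefficient × feature terms, so each term can be read directly as that feature's contribution. The coefficients and the applicant below are hypothetical. The features are assumed to be standardized so that the terms are comparable.

```python
import math

# Hypothetical logistic-regression credit model; coefficients chosen for illustration.
# Features are assumed standardized (mean 0, std 1) so contributions are comparable.
intercept = -0.5
coefs = {"income": 1.2, "debt_ratio": -1.8, "years_employed": 0.6}

def explain(applicant):
    """Return the approval probability and each feature's additive log-odds contribution."""
    contributions = {f: coefs[f] * applicant[f] for f in coefs}
    logit = intercept + sum(contributions.values())
    prob = 1 / (1 + math.exp(-logit))
    return prob, contributions

prob, contribs = explain({"income": -1.0, "debt_ratio": 1.5, "years_employed": 0.5})
print(f"approval probability: {prob:.2f}")
for feature, c in sorted(contribs.items(), key=lambda kv: kv[1]):
    print(f"{feature:>15}: {c:+.2f}")
```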

Black-box Models

These models are complex and lack inherent interpretability. Examples include:

- Neural Networks: Deep learning models with hidden layers.

- Support Vector Machines (SVMs): Uses kernel functions to build decision boundaries that can be highly non-linear in the original feature space.

- Ensemble Methods (e.g., Random Forests): Combines multiple models for accuracy.

- Transformers (e.g., BERT, GPT): Advanced architectures for NLP tasks.

Why use them? Superior performance in tasks like image recognition or language processing. However, they require post-hoc techniques to explain their outputs.

Model-based Techniques

These methods build inherently interpretable models:

- Decision Trees: Visual splits based on feature thresholds.

- Attention Mechanisms: Highlights parts of input data a model "focuses on" (e.g., in text or images).

- Self-Explaining Neural Networks: Designed to generate explanations alongside predictions.

- Concept Bottleneck Models: Maps inputs to human-understandable concepts (e.g., "wheel" or "door" in image recognition).

Use case: Healthcare diagnostics, where understanding why a model predicts a disease is as important as the prediction itself.
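A concept bottleneck splits prediction into two stages so that the final label depends only on named concepts. In this minimal sketch the concept detectors and label weights are hand-written stand-ins for trained components, and the feature and concept names are hypothetical.

```python
# Minimal concept-bottleneck sketch: raw features -> named concepts -> label.
# Concept detectors and label weights are hypothetical stand-ins for trained parts.

def predict_concepts(image_features):
    """Stage 1: map raw features to human-readable concepts scored in [0, 1]."""
    return {
        "wheel": min(1.0, image_features["round_dark_regions"] / 4),
        "door": min(1.0, image_features["vertical_panels"] / 2),
        "wing": min(1.0, image_features["long_thin_edges"] / 3),
    }

LABEL_WEIGHTS = {
    "car": {"wheel": 2.0, "door": 1.5, "wing": -2.0},
    "airplane": {"wheel": 0.5, "door": 0.5, "wing": 3.0},
}

def predict_label(concepts):
    """Stage 2: the label sees ONLY the concepts, so they double as the explanation."""
    scores = {label: sum(w[c] * concepts[c] for c in concepts)
              for label, w in LABEL_WEIGHTS.items()}
    return max(scores, key=scores.get), scores

concepts = predict_concepts({"round_dark_regions": 4, "vertical_panels": 2, "long_thin_edges": 0})
label, scores = predict_label(concepts)
print(label, concepts)
```

Because the label depends only on the concepts, a user can also intervene on them. Overriding `wing` to 1.0 in `concepts` changes the prediction to airplane, which makes the model's reasoning directly testable.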

Post-hoc Interpretation Techniques

Applied after a model makes predictions to explain its behavior:

- LIME: Fits a simple, interpretable surrogate (such as a weighted linear model) around a single prediction to approximate the complex model locally.

- SHAP: Uses Shapley values from cooperative game theory to quantify each feature’s contribution to a prediction.

- Feature Importance Scores: Ranks features by their impact on predictions.

- Saliency Maps: Visualizes input regions influencing outputs (common in computer vision).
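Permutation importance is one simple, model-agnostic way to produce feature importance scores: shuffle one feature column and measure how much accuracy drops. The model and data here are synthetic assumptions. The model deliberately ignores feature 1, so that feature's score should be zero.

```python
import random

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average accuracy drop when one feature column is shuffled (model-agnostic)."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(model(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)   # break the link between feature j and the labels
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances

def model(row):
    """Hypothetical classifier that only uses feature 0 (say, income in $k)."""
    return int(row[0] > 50)

data_rng = random.Random(1)
X = [[data_rng.uniform(0, 100), data_rng.uniform(0, 100)] for _ in range(200)]
y = [model(row) for row in X]   # labels the model gets fully right, for simplicity

print(permutation_importance(model, X, y))   # feature 1 (ignored by the model) scores 0.0
```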

Example: Explaining why a credit-scoring model denied a loan by highlighting income and debt ratio as key factors.
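For a small number of features, Shapley values can be computed exactly. Each feature's marginal contribution is averaged over every coalition of the other features. The SHAP library relies on faster approximations and model-specific algorithms instead.

This brute-force sketch defines a "missing" feature by substituting a reference applicant's value, which is one of several possible conventions. The scoring function and applicant are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline values."""
    features = list(x)
    n = len(features)

    def value(coalition):
        inputs = {f: (x[f] if f in coalition else baseline[f]) for f in features}
        return model(inputs)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(set(subset) | {f}) - value(set(subset)))
        phi[f] = total
    return phi

def credit_score(a):
    """Hypothetical credit score with an income x debt interaction; higher is better."""
    return (0.5 * a["income"] - 0.8 * a["debt_ratio"]
            - 0.3 * a["income"] * a["debt_ratio"] + 0.2 * a["age"])

applicant = {"income": -1.0, "debt_ratio": 2.0, "age": 0.0}   # standardized units
average = {"income": 0.0, "debt_ratio": 0.0, "age": 0.0}      # reference applicant

phi = shapley_values(credit_score, applicant, average)
print(phi)
```

Here debt ratio receives most of the negative attribution (about -1.3) and income a smaller share (about -0.2). The interaction term is split evenly between the two. The values sum to the gap between this applicant's score and the reference score. Exact enumeration grows exponentially with the number of features, which is why approximations are used in practice.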

Counterfactual Explanations

Answers the question: "What changes would alter the model’s decision?"

- Generates "what-if" scenarios (e.g., "Your loan would be approved if your income increased by $10k").

- Useful for actionable insights in fairness auditing or user recommendations.
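A bare-bones way to generate such a what-if is to search for the smallest change to one feature that flips the decision. The approval rule below is a hypothetical stand-in for a black-box model. The single-feature grid search is a simplification: practical counterfactual methods search over several features at once and penalize implausible or unactionable changes.

```python
def approve(applicant):
    """Hypothetical black-box rule standing in for a credit model."""
    score = applicant["income"] / 1000 - 60 * applicant["debt_ratio"]
    return score >= 30

def income_counterfactual(model, applicant, step=1_000, max_increase=100_000):
    """Smallest income increase (in `step` increments) that turns a denial into approval."""
    for increase in range(0, max_increase + step, step):
        candidate = dict(applicant, income=applicant["income"] + increase)
        if model(candidate):
            return increase
    return None   # no counterfactual found within the search range

applicant = {"income": 50_000, "debt_ratio": 0.5}
print(income_counterfactual(approve, applicant))
```

For this applicant the search returns 10000, which yields exactly the "approved if your income increased by $10k" style of explanation.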

Causal Inference Techniques

Identifies cause-effect relationships in data, going beyond correlations:

- Causal Graphs: Maps how variables influence each other.

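- Do-Calculus: Provides rules for estimating the effect of an intervention, written P(Y | do(X)), from observational data plus a causal graph (e.g., "Does medication X cause recovery?").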

Use case: Evaluating policy changes or medical treatments.
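When a confounder is observed, the rules of do-calculus reduce P(Y | do(X)) to the backdoor adjustment Σ_s P(Y | X, s) P(s). This sketch simulates a hypothetical structural causal model in which sicker patients are more likely to receive the medication. It compares three quantities:

- A naive observational difference.
- The backdoor-adjusted estimate.
- The effect obtained by actually intervening in the simulator.

All probabilities are made up for illustration.

```python
import random

def simulate(n, do_x=None, seed=0):
    """Sample (severity, treated, recovered) rows from a hypothetical causal model.

    Severity S confounds treatment X and recovery Y. Passing do_x forces X to that
    value for everyone, which is what the intervention do(X = do_x) means.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        s = int(rng.random() < 0.5)
        if do_x is None:
            x = int(rng.random() < (0.8 if s else 0.2))   # sicker patients get treated more
        else:
            x = do_x
        y = int(rng.random() < 0.6 + 0.2 * x - 0.4 * s)  # true treatment effect: +0.2
        rows.append((s, x, y))
    return rows

def mean(values):
    values = list(values)
    return sum(values) / len(values)

obs = simulate(100_000)

# 1. Naive comparison of treated vs. untreated: confounded by severity.
naive = (mean(y for s, x, y in obs if x == 1)
         - mean(y for s, x, y in obs if x == 0))

# 2. Backdoor adjustment: sum over s of P(s) * [P(y | x=1, s) - P(y | x=0, s)].
adjusted = 0.0
for level in (0, 1):
    p_level = mean(s == level for s, _, _ in obs)
    adjusted += p_level * (mean(y for s, x, y in obs if s == level and x == 1)
                           - mean(y for s, x, y in obs if s == level and x == 0))

# 3. Ground truth from actually intervening in the simulator.
interventional = (mean(y for _, _, y in simulate(100_000, do_x=1, seed=1))
                  - mean(y for _, _, y in simulate(100_000, do_x=0, seed=2)))

print(f"naive {naive:+.3f} | adjusted {adjusted:+.3f} | interventional {interventional:+.3f}")
```

With this setup the naive difference comes out slightly negative: the medication looks harmful because sicker patients take it more often. The adjusted estimate and the simulated intervention both land near the true +0.2.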

Graph-based Explanation Techniques

Explains relationships in graph-structured data (e.g., social networks, molecular structures):

- GNN Explainers (e.g., GNNExplainer): Highlights the nodes and edges that most influence a graph neural network’s prediction.

- Subgraph Extraction: Identifies critical components in a larger graph.

Example: Explaining fraud detection in financial transaction networks.
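Many graph explainers score edges by how much the prediction changes when those edges are masked out. The sketch below uses plain occlusion on a hypothetical transaction graph. A one-step neighbor-averaging function stands in for a trained GNN. Methods such as GNNExplainer instead learn a soft edge mask by optimization.

```python
# Hypothetical transaction graph (sender, receiver) and a prior risk score per account.
edges = [("acct_A", "acct_X"), ("acct_B", "acct_X"), ("acct_C", "acct_X"), ("acct_C", "acct_D")]
risk = {"acct_A": 0.9, "acct_B": 0.1, "acct_C": 0.2, "acct_D": 0.0, "acct_X": 0.0}

def fraud_score(node, edge_list):
    """Toy one-step message passing: a node's score is the mean risk of its neighbors.

    This stands in for a trained GNN layer; real GNNs use learned weights.
    """
    neighbors = [v for u, v in edge_list if u == node] + [u for u, v in edge_list if v == node]
    return sum(risk[n] for n in neighbors) / len(neighbors) if neighbors else 0.0

def edge_importance(node, edge_list):
    """Occlusion-style explanation: score change when each edge is removed."""
    base = fraud_score(node, edge_list)
    return {e: base - fraud_score(node, [f for f in edge_list if f != e]) for e in edge_list}

for edge, delta in sorted(edge_importance("acct_X", edges).items(), key=lambda kv: -kv[1]):
    print(edge, f"{delta:+.3f}")
```

The edge from the high-risk `acct_A` comes out as the main driver of `acct_X`'s score. The `acct_C`-`acct_D` edge, which never touches `acct_X`, scores exactly zero.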

Multimodal Explainability

Combines explanations across data types (text, images, audio):

- Visual Question Answering (VQA): Explanations show how a model answers a question like "Why is the dog barking?" by linking evidence in the image to words in the question.

- Cross-modal Attention: Shows how a model aligns text and images (e.g., in captions).
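Cross-modal attention can be read the same way as self-attention, except that queries come from one modality and keys from another. In this sketch each caption word attends over image regions. The 3-d embeddings and region names are invented, standing in for a trained vision-language model's learned representations.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical embeddings: caption words and image regions in a shared 3-d space.
words = {"dog": [1.0, 0.1, 0.0], "frisbee": [0.0, 1.0, 0.2], "grass": [0.1, 0.0, 1.0]}
regions = {"center": [0.9, 0.2, 0.1], "top_right": [0.1, 0.9, 0.0], "bottom": [0.0, 0.1, 0.8]}

def cross_attention(word_vecs, region_vecs):
    """For each word (query), attention weights over image regions (keys)."""
    names = list(region_vecs)
    d = len(next(iter(region_vecs.values())))
    result = {}
    for word, q in word_vecs.items():
        scores = [sum(a * b for a, b in zip(q, region_vecs[r])) / math.sqrt(d) for r in names]
        result[word] = dict(zip(names, softmax(scores)))
    return result

for word, weights in cross_attention(words, regions).items():
    best = max(weights, key=weights.get)
    print(f"{word:>8} -> {best} ({weights[best]:.2f})")
```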

Thanks for reading! This overview aimed to demystify Explainable AI (XAI) by exploring its why and how. As AI’s role expands, understanding its decisions isn’t optional; it’s the key to a future where machines empower, not alienate.

Citation

Cited as:

Transformer, Vi. (Jan 2025). "XAI Overview". 16x16 Words of Wisdom. https://vitransformer.netlify.app/posts/xai-overview/

Or

@article{vit2025xai,
  title = "XAI Overview",
  author = "Transformer, Vi",
  journal = "16x16 Words of Wisdom",
  year = "2025",
  month = "Jan",
  url = "https://vitransformer.netlify.app/posts/xai-overview/"
}

References

- Hsieh, W., Bi, Z., Jiang, C., et al. (2024). "A Comprehensive Guide to Explainable AI: From Classical Models to LLMs." arXiv preprint arXiv:2412.00800. https://arxiv.org/abs/2412.00800

- Monteiro, W. R., & Reynoso-Meza, G. (2020). "Explaining black-box classification and regression models with counterfactuals using multi-objective constrained optimization." DOI: 10.13140/RG.2.2.30680.52480

- Reiff, D. (2021). "Understand Your Algorithm with Grad-CAM." Towards Data Science. https://towardsdatascience.com/understand-your-algorithm-with-grad-cam-d3b62fce353

- Vig, J. (2019). "Deconstructing BERT, Part 2: Visualizing the Inner Workings of Attention." Towards Data Science. https://towardsdatascience.com/deconstructing-bert-part-2-visualizing-the-inner-workings-of-attention-60a16d86b5c1